TeachMyAgent: a Benchmark for Automatic Curriculum Learning in Deep RL

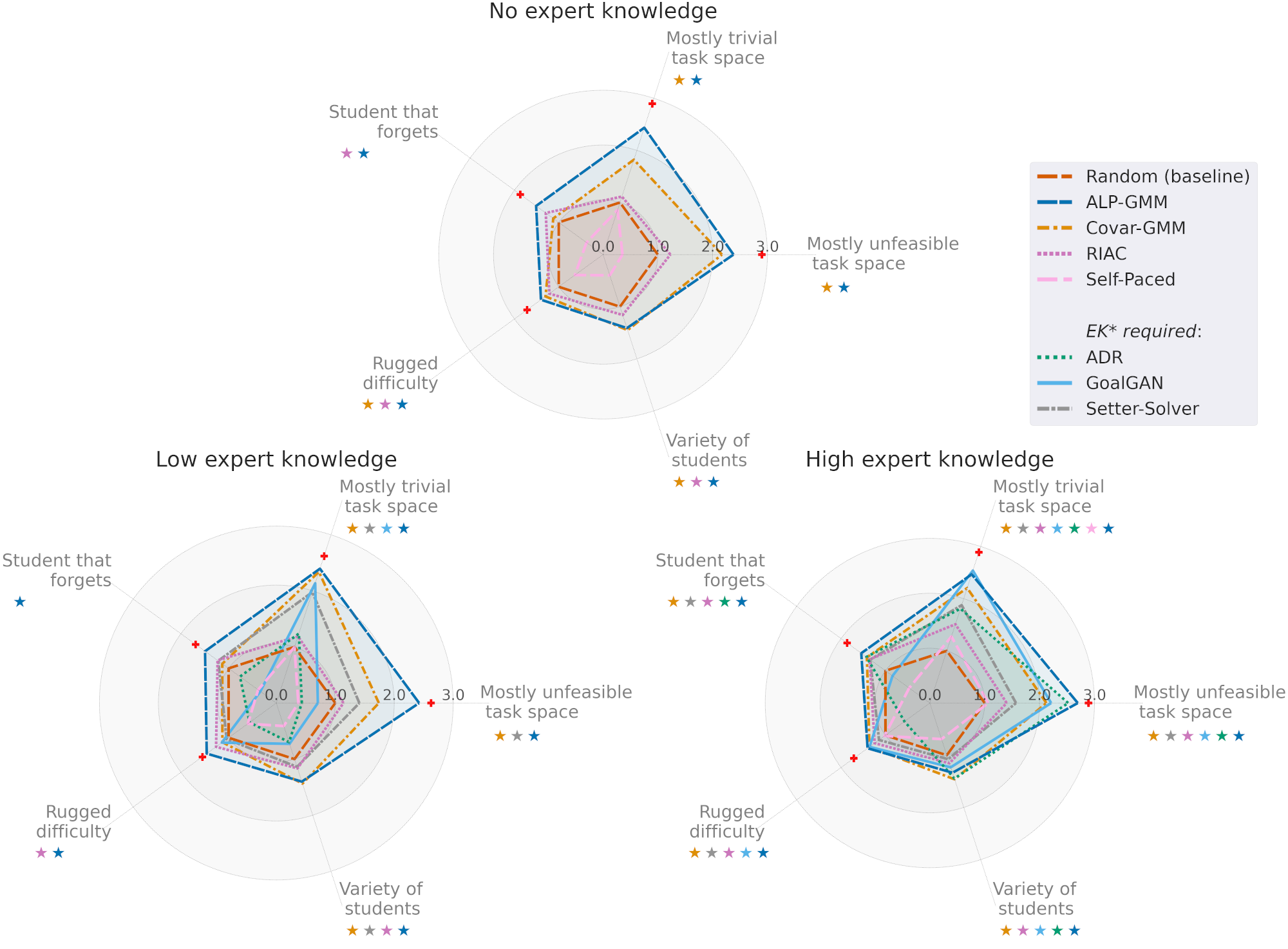

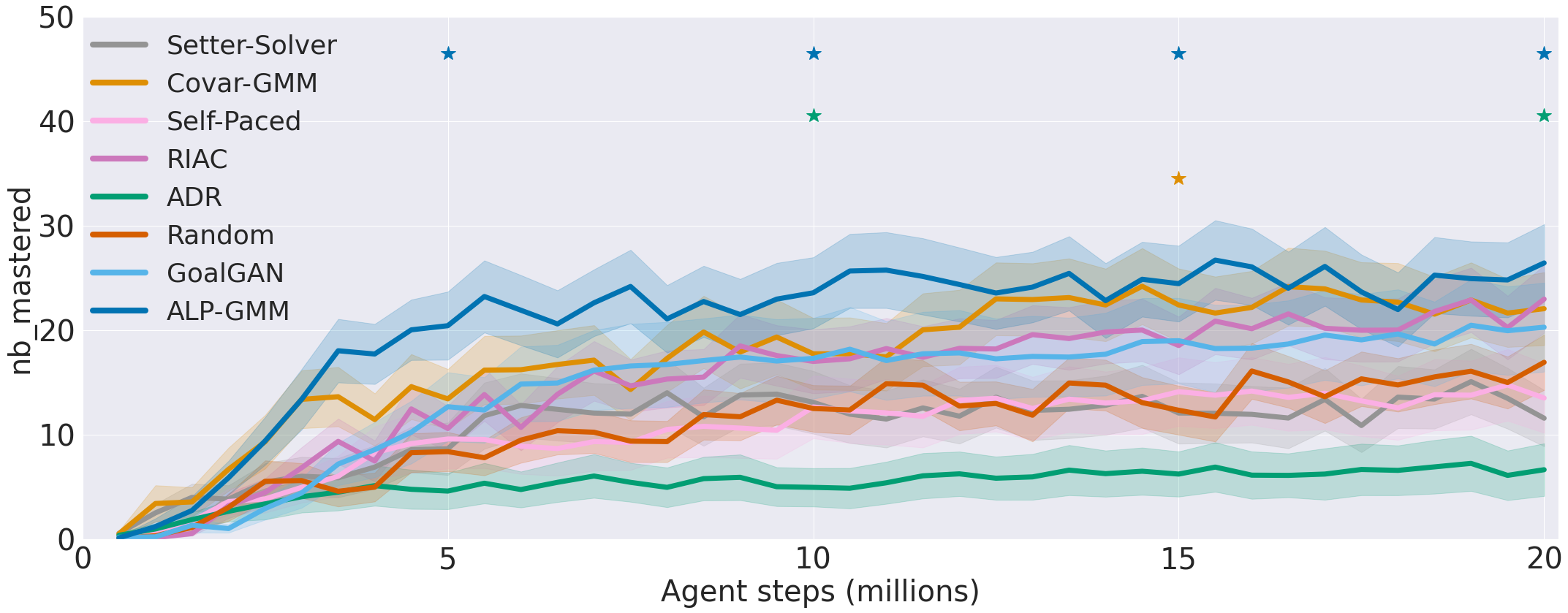

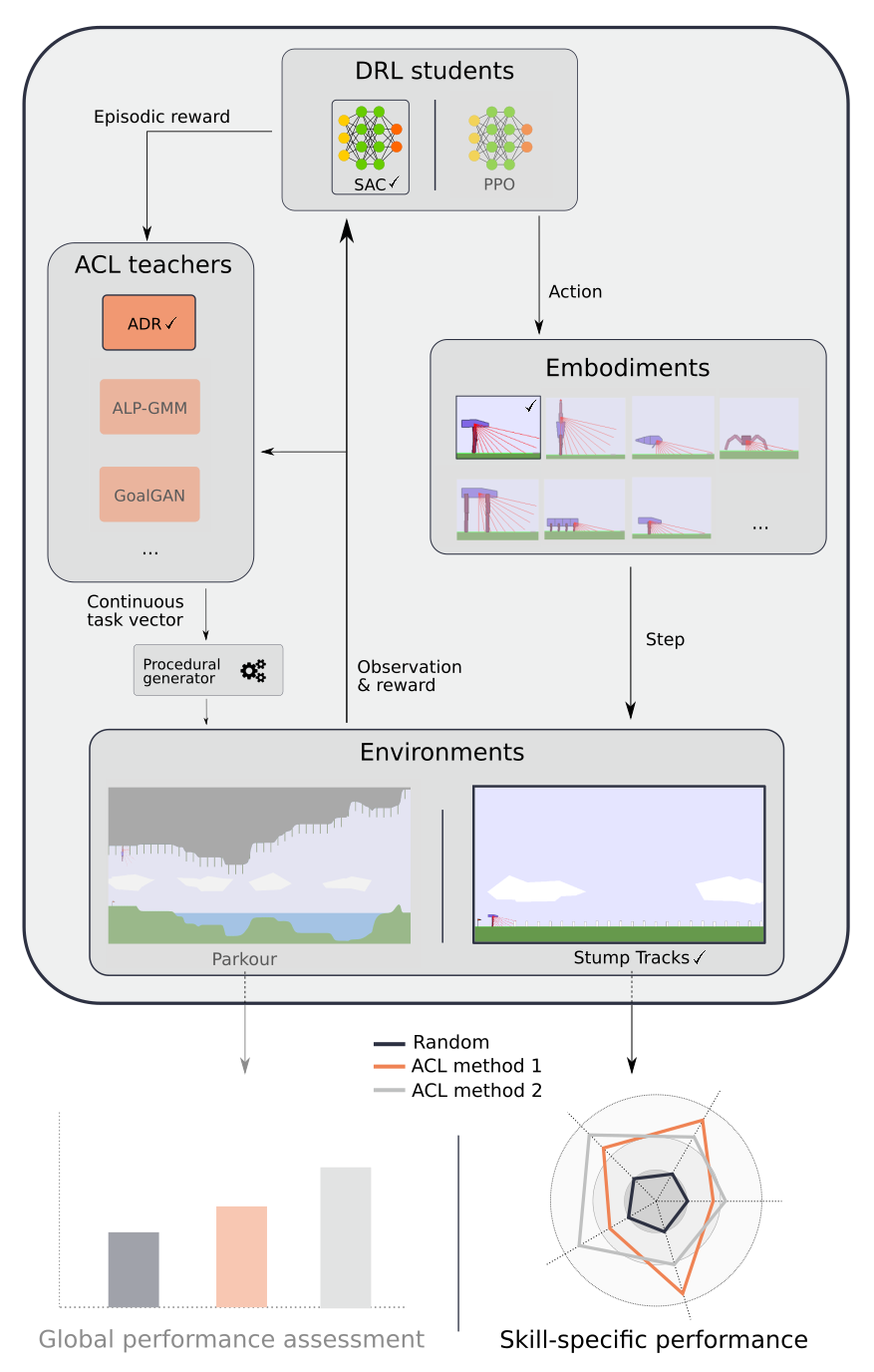

TeachMyAgent is a testbed platform for Automatic Curriculum Learning (ACL) methods in Deep Reinforcement Learning (Deep RL). We leverage procedurally generated environments to assess the performance of teacher algorithms in continuous task spaces. We provide tools for the systematic study and comparison of ACL algorithms using both skill-specific and global performance assessment.
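To make the teacher/student interaction concrete, here is a minimal sketch of a curriculum loop over a continuous task space. It is not TeachMyAgent's API: the names `RandomTeacher`, `sample_task`, `update` and `run_episode`, as well as the two task dimensions and their bounds, are hypothetical and chosen for illustration only. The Deep RL student is replaced by a toy stand-in function so that the example runs on its own.

```python
import random


class RandomTeacher:
    """Baseline teacher (hypothetical): samples tasks uniformly from a box-shaped task space."""

    def __init__(self, mins, maxs, seed=0):
        assert len(mins) == len(maxs)
        self.mins, self.maxs = list(mins), list(maxs)
        self.rng = random.Random(seed)
        self.history = []  # (task, episodic_return) pairs

    def sample_task(self):
        # One value per task dimension, drawn independently within its bounds.
        return [self.rng.uniform(lo, hi) for lo, hi in zip(self.mins, self.maxs)]

    def update(self, task, episodic_return):
        # A random teacher only records feedback; a learning-based teacher
        # would use it to adapt its sampling distribution.
        self.history.append((task, episodic_return))


def run_episode(task):
    """Toy stand-in for a student rollout: return decreases with task difficulty."""
    stump_height, stump_spacing = task  # illustrative task parameters
    return stump_spacing - 2.0 * stump_height


# Illustrative bounds for a two-dimensional task space.
teacher = RandomTeacher(mins=[0.0, 0.0], maxs=[3.0, 6.0])
for _ in range(100):
    task = teacher.sample_task()
    teacher.update(task, run_episode(task))
```

An ACL algorithm would differ from this baseline mainly in `update` and `sample_task`, where the recorded returns would shape which tasks are proposed next.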

We release our platform as an open-source repository along with APIs allowing one to extend our testbed. We currently provide the following elements:

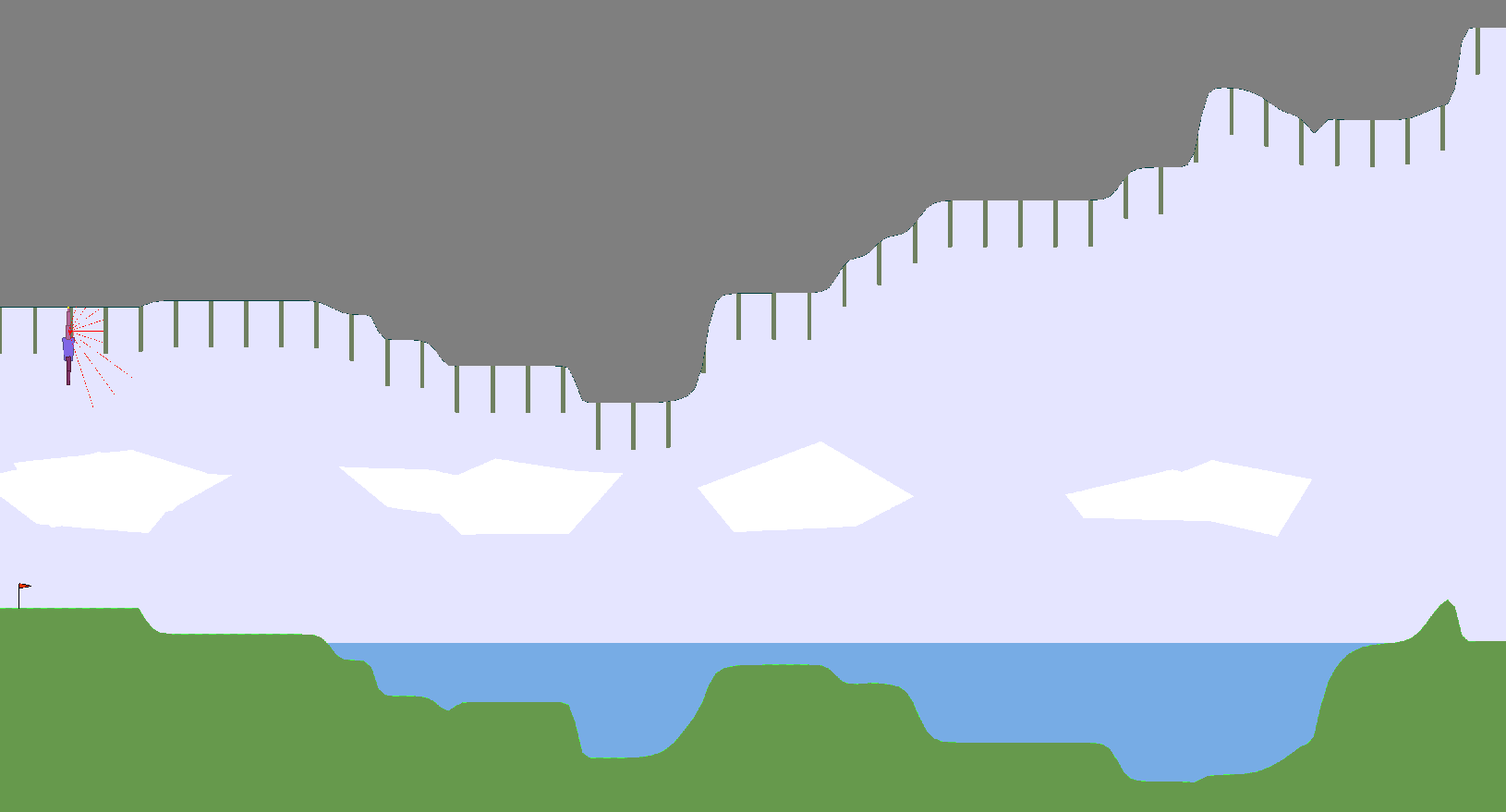

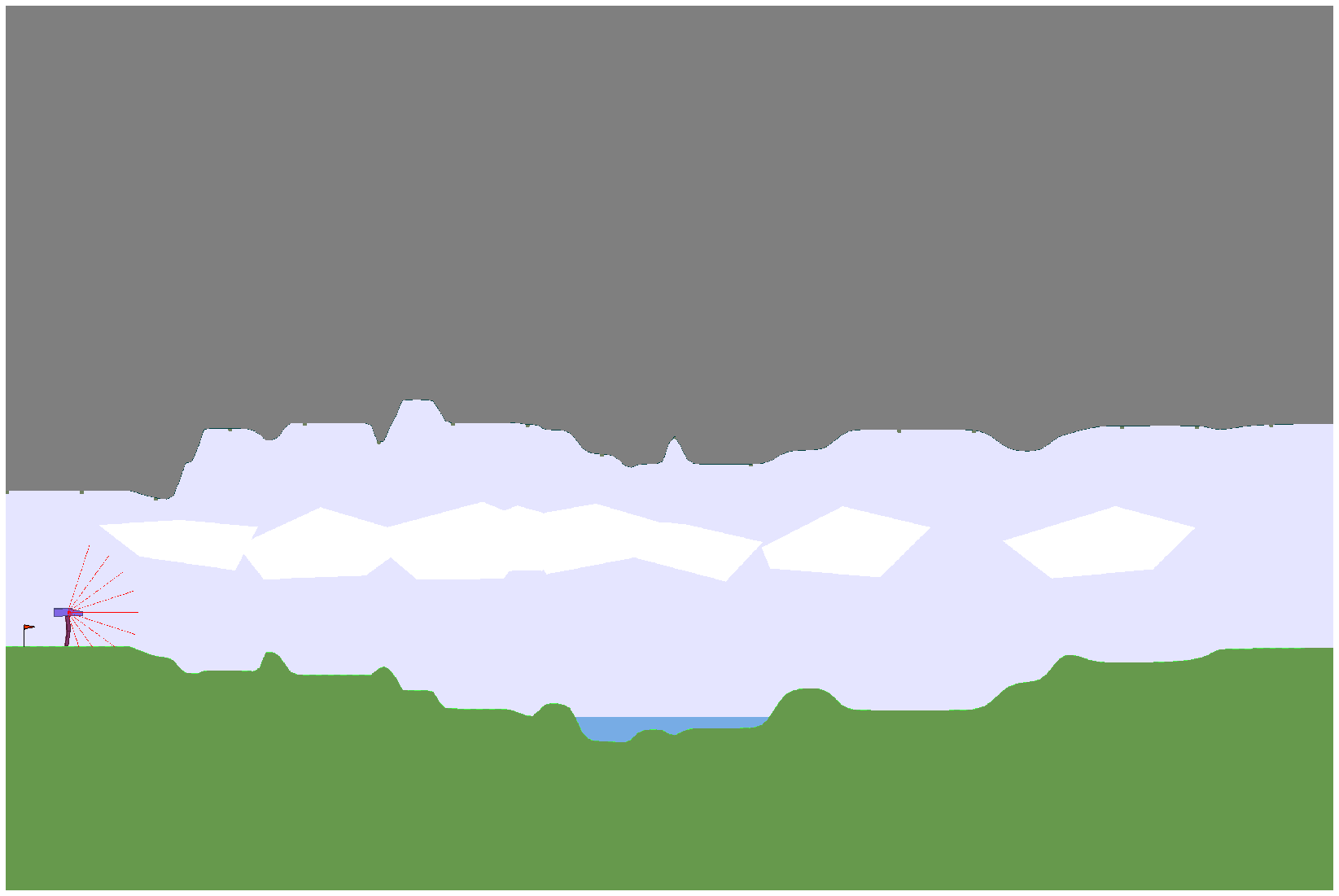

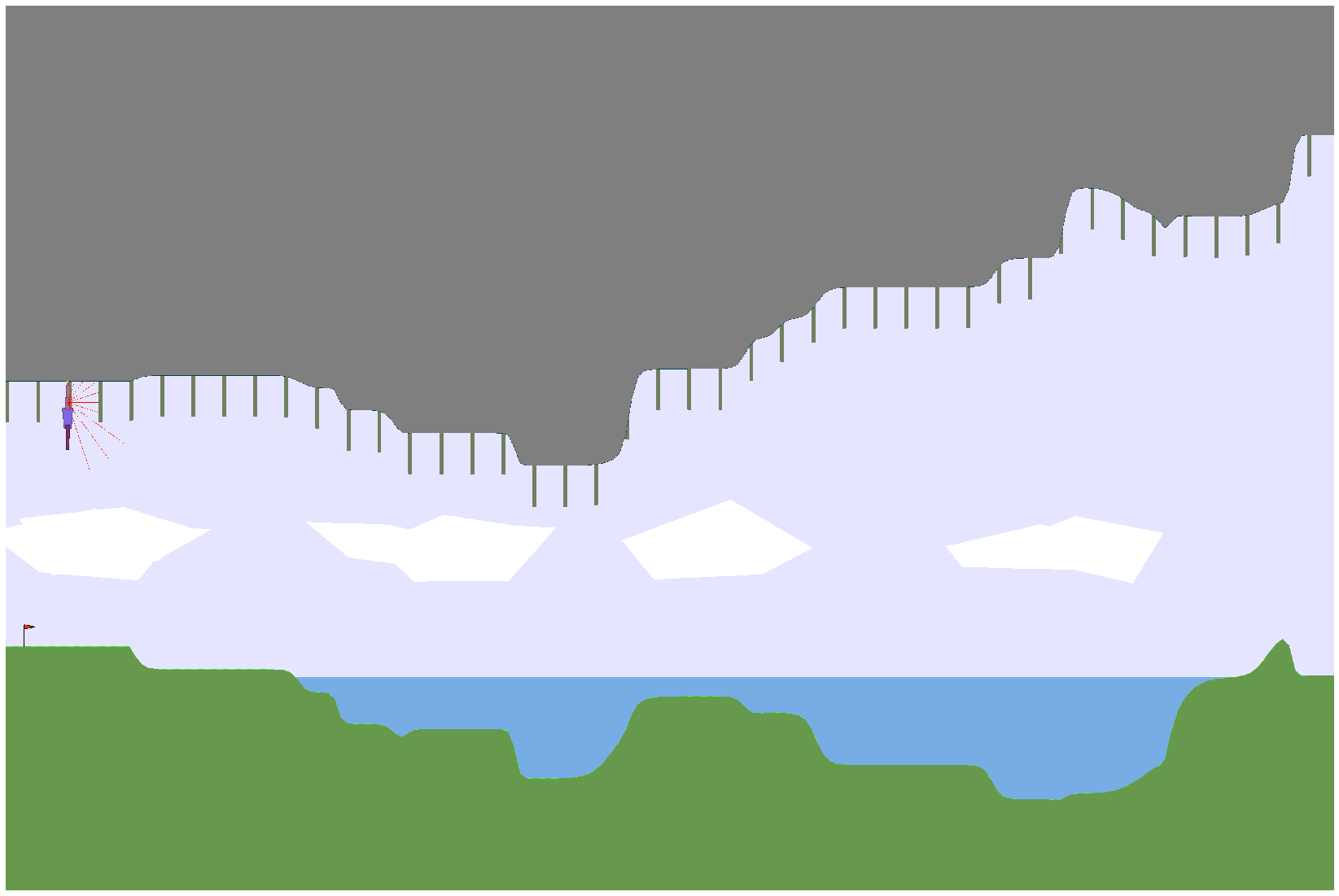

- Two parametric Box2D environments: Stump Tracks (an extension of this environment) and Parkour

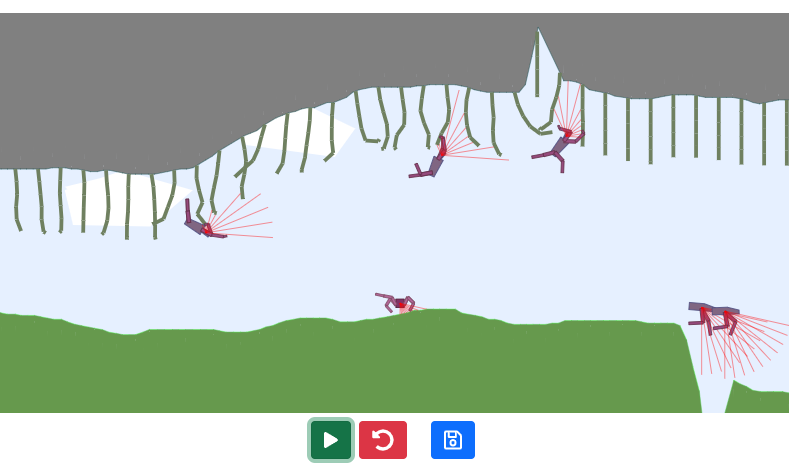

- Multiple embodiments with different locomotion skills (e.g. bipedal walker, spider, climbing chimpanzee, fish)

- Two Deep RL students: SAC and PPO

- Several ACL algorithms: ADR, ALP-GMM, Covar-GMM, SPDL, GoalGAN, Setter-Solver, RIAC

- Two benchmark experiments using the elements above: skill-specific comparison and global performance assessment

- A notebook for systematic analysis of results using statistical tests along with visualisation tools (plots, videos...)
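As an illustration of the kind of statistical comparison mentioned in the last item, the sketch below computes Welch's t statistic for two sets of per-seed scores. Whether the notebook uses exactly this test is an assumption here, the function name `welch_t` is hypothetical, and the scores are made-up numbers, not results from the benchmark. Only the standard library is used, so the p-value (which needs the Student t distribution) is left out.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Return (t statistic, Welch-Satterthwaite degrees of freedom) for two samples."""
    na, nb = len(a), len(b)
    # Squared standard errors of each sample mean (unbiased sample variance / n).
    va, vb = variance(a) / na, variance(b) / nb
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return t, df


# Made-up final scores of two teachers, one value per seed.
teacher_a = [62.0, 58.5, 65.1, 60.3, 63.7]
teacher_b = [48.2, 55.0, 51.4, 47.9, 53.3]
t_stat, dof = welch_t(teacher_a, teacher_b)
print(f"t = {t_stat:.3f}, degrees of freedom = {dof:.2f}")
```

Welch's variant does not assume equal variances across the two groups, which is a reasonable default when different teachers yield students with very different spreads across seeds.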

Introducing new morphologies

We introduce new embodiments for our Deep RL agents to interact with environments. We create three types of morphology:

- Walkers

- Swimmers

- Climbers

Additionally, we introduce new physics (water, graspable surfaces) in our environments, so that each type of embodiment has a preferred "milieu" (e.g. walkers cannot survive underwater). Together, these new embodiments and physics produce agents that require novel forms of locomotion such as climbing or swimming. We give a detailed presentation of our morphologies on this page, as well as some policies we managed to learn using a Deep RL learner.